Next: Sums of Two Squares Up: Continued Fractions Previous: Continued Fractions of Algebraic Contents Index

Suppose

that somehow you can compute approximations to some rational number,

and want to figure what the rational number probably is. Computing

the approximation to high enough precision to find a period in the

decimal expansion is not a good approach, because the period can be

huge (see below). A much better approach is to compute the simple

continued fraction of the approximation, and truncate it before a

large partial quotient ![]() , then compute the value of the truncated

continued fraction. This results in a rational number that has

relatively small numerator and denominator, and is close to the

approximation of the rational number, since the tail end of the

continued fraction is at most

, then compute the value of the truncated

continued fraction. This results in a rational number that has

relatively small numerator and denominator, and is close to the

approximation of the rational number, since the tail end of the

continued fraction is at most ![]() .

.

We begin with a contrived example, which illustrates how to recognize a rational number. Let

The continued fraction of the truncation

We have

![$\displaystyle [2, 2, 7, 2, 1, 5, 1, 1, 1, 1, 1, 1] = \frac{9495}{3847}.

$](img1948.png)

Notice that no repetition is evident in the digits of

For a slightly less contrived application of this idea, suppose

![]() is a polynomial with integer coefficients, and we know

for some reason that one root of

is a polynomial with integer coefficients, and we know

for some reason that one root of ![]() is a rational number. Then we

can find that rational number by using Newton's method to approximate

each root, and continued fractions to decide whether each root is a

rational number (we can substitute the value of the continued fraction

approximation into

is a rational number. Then we

can find that rational number by using Newton's method to approximate

each root, and continued fractions to decide whether each root is a

rational number (we can substitute the value of the continued fraction

approximation into ![]() to see if it is actually a root). One could

also use the well-known rational root theorem, which asserts that any

rational root

to see if it is actually a root). One could

also use the well-known rational root theorem, which asserts that any

rational root ![]() of

of ![]() , with

, with

![]() coprime, has the property

that

coprime, has the property

that ![]() divides the constant term of

divides the constant term of ![]() and

and ![]() the leading

coefficient of

the leading

coefficient of ![]() . However, using that theorem to find

. However, using that theorem to find ![]() would

require factoring the constant and leading terms of

would

require factoring the constant and leading terms of ![]() , which could

be completely impractical if they have a few hundred digits

(see Section 1.1.3). In contrast, Newton's method and continued

fractions should quickly find

, which could

be completely impractical if they have a few hundred digits

(see Section 1.1.3). In contrast, Newton's method and continued

fractions should quickly find ![]() , assuming the degree of

, assuming the degree of ![]() isn't

too large.

isn't

too large.

For example, suppose

![]() . To apply

Newton's method, let

. To apply

Newton's method, let ![]() be a guess for a root of

be a guess for a root of ![]() . Then iterate

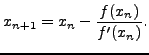

using the recurrence

. Then iterate

using the recurrence

Choosing

and

The continued fraction of the approximations

and

Truncating the continued fraction of

which evaluates to

sage: def newton_root(f, iterates=2, x0=0, prec=53): ... x = RealField(prec)(x0) ... R = PolynomialRing(ZZ,'x') ... f = R(f) ... g = f.derivative() ... for i in range(iterates): ... x = x - f(x)/g(x) ... return x

Next we run the Newton iteration, and compute the continued fraction of the result:

sage: a = newton_root(3847*x^2 - 14808904*x + 36527265); a 2.46815700480740 sage: cf = continued_fraction(a); cf [2, 2, 7, 2, 1, 5, 1, 1, 1, 1, 1, 1, 103, 8, 1, 2, 3, 1, 1]

We truncate the continued fraction and compute its value.

sage: c = cf[:12]; c [2, 2, 7, 2, 1, 5, 1, 1, 1, 1, 1, 1] sage: c.value() 9495/3847

Another computational application of continued fractions, which we can only hint at, is that there are functions in certain parts of advanced number theory (that are beyond the scope of this book) that take rational values at certain points, and which can only be computed efficiently via approximations; using continued fractions as illustrated above to evaluate such functions is crucial.

William 2007-06-01